The Valley of AI Trust

As a researcher at the intersection of Robotics and Machine Learning, I have found the most surprising shift over my five years in the field to be how quickly people have warmed to the idea of having AI impact their lives. Learning thermostats are becoming increasingly popular (probably good), digital voice assistants pervade our technology (probably less good), and self-driving vehicles populate our roads (about which I have mixed feelings). Along with this rapid adoption, fueled largely by the hype associated with artificial intelligence and recent progress in machine learning, we as a society are opening ourselves up to the risks of using this technology in circumstances it is not yet prepared to deal with. Particularly for safety-critical applications or the automation of tasks that can directly impact quality of life, we must be careful to avoid what I call the valley of AI trust: the dip in overall safety caused by premature adoption of automation.

In light of the potential risks, the widespread adoption of AI is perhaps surprising at first glance. Even as late as two years ago, researchers and technologists were predicting that we would need massive performance improvements in AI and the capabilities of autonomous systems before humans would become comfortable with them:

[…] the probability of a fatality caused by an accident per one hour of (human) driving is known to be $10^{-6}$. It is reasonable to assume that for society to accept machines to replace humans in the task of driving, the fatality rate should be reduced by three orders of magnitude, namely a probability of $10^{-9}$ per hour [1].

[1] This estimate is inspired from the fatality rate of air bags and from aviation standards. In particular, $10^{-9}$ is the probability that a wing will spontaneously detach from the aircraft in mid air.

This three-orders-of-magnitude threshold for acceptance has not been borne out in reality, as the relatively widespread use of Tesla’s Autopilot feature makes obvious.
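To make the figures in the quoted passage concrete, here is a minimal sketch of the arithmetic behind them. The two rates come straight from the quote. The function names are my own, and treating the per-hour probability as constant, so that its reciprocal is the expected number of driving hours per fatality, is a simplifying assumption on my part.

```python
from fractions import Fraction
import math

# Per-hour fatality probabilities taken from the quoted passage.
HUMAN_RATE = Fraction(1, 10**6)   # human driving
TARGET_RATE = Fraction(1, 10**9)  # proposed bar for societal acceptance


def hours_per_fatality(rate: Fraction) -> int:
    """Expected driving hours per fatality, assuming a constant per-hour rate."""
    return int(1 / rate)


def orders_of_magnitude(current: Fraction, target: Fraction) -> int:
    """Number of factors of ten separating the current rate from the target."""
    return round(math.log10(current / target))


print(hours_per_fatality(HUMAN_RATE))    # one fatality per million hours
print(hours_per_fatality(TARGET_RATE))   # one fatality per billion hours
print(orders_of_magnitude(HUMAN_RATE, TARGET_RATE))
```

Put this way, the proposed bar asks an automated driver to go a thousand times longer between fatal accidents than a human does.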

So how did we get here? Why are we seeing increasingly widespread acceptance of AI technologies despite the potential for risk?

Corporate financial incentives clearly play a role: there is money to be made by being the first company to provide a certain AI service or to automate consumer-facing work, and the rise of chatbots for customer service is a notable example. But I would argue that deeper effects are at play. Consumers are often choosing to use these technologies, and Amazon and Facebook are pushing quite hard to win our affection (though why anyone would pay money to put a Facebook-brand camera in their living room is beyond me). The issue is that most consumers lack an understanding of how much they can trust these automated systems to make effective decisions and under what conditions they might fail.

The hype surrounding “AI” these days is certainly tipping the scales in a potentially dangerous direction. Many big tech companies, including Google, Microsoft, and Amazon Web Services, have positioned themselves as AI companies, and their marketing preaches that the future they envision for this technology is very nearly upon us. In many ways, this narrative is supported by the incredible rate of progress in the research community over the last eight years: AI has matched or surpassed human performance on benchmark tasks like language translation, playing the game of Go, and object detection, and has enabled mind-bending applications ranging from generative art to modeling protein folding. Referencing its potential for transformative impact, Andrew Ng has even been so ambitious as to call AI “the new electricity”. The hype surrounding the incredible capabilities of AI is so strong that a nontrivial portion of the conversation about machine learning in the popular media is devoted to AI superintelligence. As a researcher, I occasionally get asked, “How much should we be worried about AI taking over?” One thing I always make clear to people: AI is a lot less intelligent than you think.

For the foreseeable future, society is substantially more likely to overestimate AI than to underestimate its capabilities. Progress on the specific applications that have captured public imagination does not mean that these individual systems can be composed into an AI capable of rivaling human performance in general. IBM Watson is a telling example of this failing. Watson was welcomed into hospitals to discover connections between patient symptoms that medical staff may have missed, but it failed to deliver on its promises: the system made a number of dangerous predictions and recommendations that, if trusted implicitly, could have resulted in serious harm to patients.

The popular media also has a problematic tendency to overhype AI technologies, a point covered in a fantastic article in The Guardian entitled “The discourse is unhinged”.

Having done some deeper research on Watson, I should point out that IBM has put out a statement asserting that they are “100% focused on patient safety.” For all the system’s shortcomings, I do not doubt that the researchers and engineers value human life.

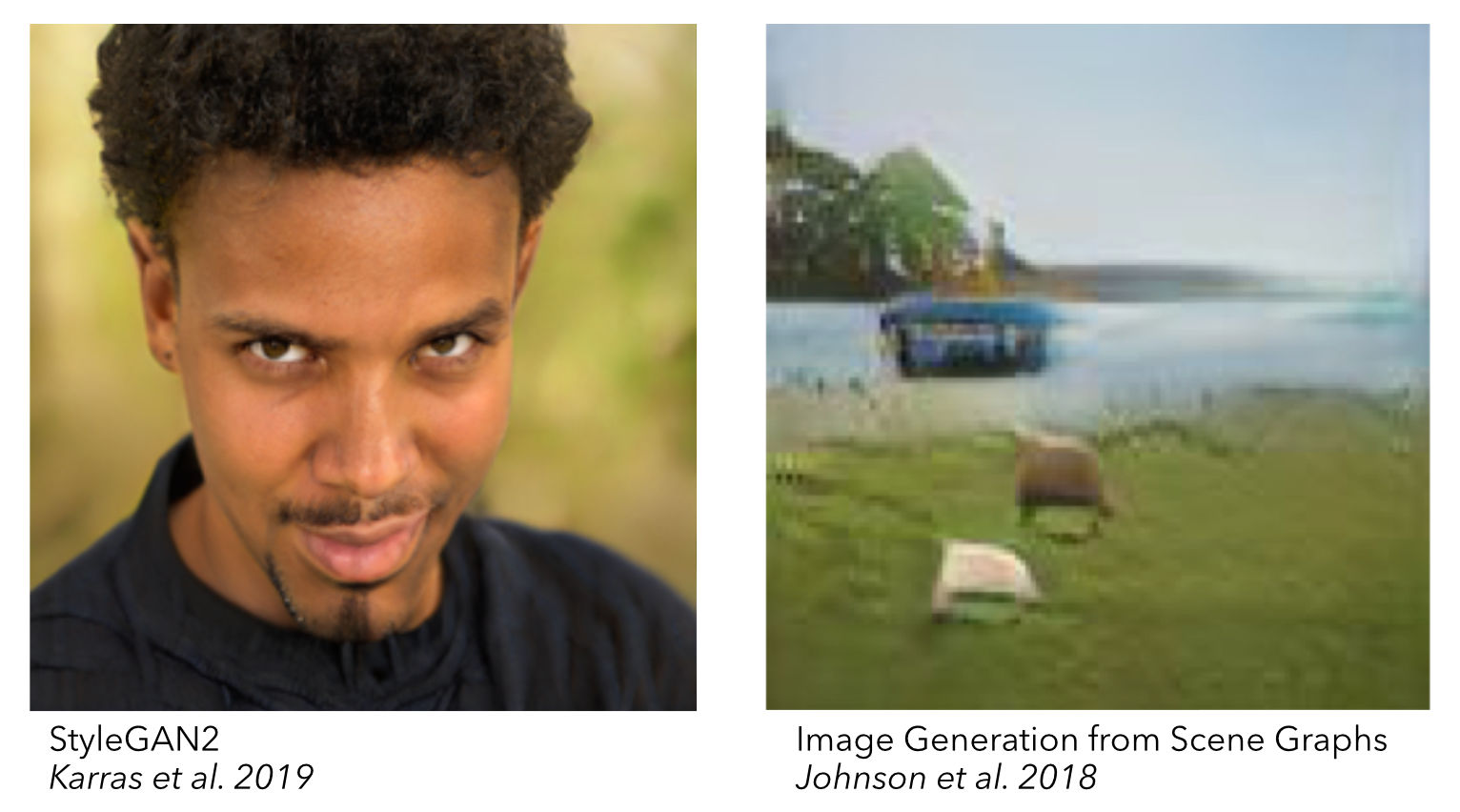

Despite the hype, there remains no clear delineation between tasks that AI is good at and those at which it is catastrophically bad. For example, consider the task of image generation, which has received nontrivial popular attention in the last year or so. Between generating a “random human face” and creating an image of “A sheep by another sheep standing on the grass with the sky above and a boat in the ocean by a tree behind the sheep.”, on which would you expect an image generation system to do a better job? Perhaps generating faces is harder, because humans are incredibly discerning when identifying “normal looking” faces. Perhaps the specificity of the sheep example makes it the more challenging task. The results speak for themselves:

So why is the sheep example perceptually much worse? The volume of query-specific data available to train the system plays a non-trivial role: the face generation system has access to many thousands of high-resolution faces, while the scene generation system is given a much more diverse set of images and accompanying natural language descriptions. I would be surprised if even one of those images contained a field with precisely two sheep in it. Seen from this perspective, the results are actually quite impressive, since the system generated an image that was potentially completely unlike any image it had ever seen before. However, understanding how and why these systems might perform poorly on a particular task often requires a deep, nuanced understanding of the AI system and the data used to train it. For the average consumer, this level of understanding should not be a requirement.

If you want to better understand the sheep example, I highly recommend reading the Image Generation from Scene Graphs paper, from which this result is taken. I have done a poor job here of emphasizing how impressive it is that an AI can be made to generate custom images from complex inputs.

To be clear, there are spaces in which AI is already purportedly improving safety: Tesla’s self-published safety report even claims significant safety improvements when using its “autopilot”, though the reality is perhaps more nuanced. Regardless, claims from Elon Musk that full autonomy is coming to Tesla’s vehicles as early as late 2020 are likely misleading, and they lend false credibility to the idea that quick progress on highway driving or parking lot navigation implies that the same technology will just as quickly enable full Level 5 autonomy. For example, driving on the highway is relatively easy, though merging onto an off-ramp is a much more challenging problem, because it requires more reliable predictions of the behavior of the other cars on the road and deeper knowledge of social convention.

So where does this supposed boundary exist? On what tasks should we trust AI? It is difficult to give general instruction about where AI may fail because rarely is the answer to these questions obvious. This deficiency is in part because even the research community often does not know where the lines are. There exist many open questions about the potential capacity for AI to revolutionize our everyday experience, and bold claims of its transformative power are difficult to refute when clear answers do not exist. Additionally, AI often fails in ways that humans do not expect, because its internal representation of the problem or of the data it uses for training is quite different from ours.

In light of these difficulties, our attitude towards new AI systems should be straightforward: be skeptical. We are on the cusp of a future in which AI will augment human capacity. Yet, for now, we need to ensure that these technologies are advertised in a way that makes clear what they can and cannot do and what it looks like when they fail. Trust should be earned, and must be re-earned when the scope of automation increases.

As always, I welcome discussion in the comments below or on Hacker News.

References

- Shai Shalev-Shwartz, Shaked Shammah & Amnon Shashua, On a Formal Model of Safe and Scalable Self-driving Cars, arXiv preprint arXiv:1708.06374, 2017.

- Justin Johnson, Agrim Gupta & Li Fei-Fei, Image Generation from Scene Graphs, in: Conference on Computer Vision and Pattern Recognition (CVPR), 2018.

- Tero Karras et al., Analyzing and Improving the Image Quality of StyleGAN, arXiv preprint arXiv:1912.04958, 2019.