Machine Learning & Robotics: My (biased) 2019 State of the Field

At the end of every year, I like to take a look back at the different trends or papers that inspired me the most. As a researcher in the field, I find it can be quite productive to take a deeper look at where I think the research community has made surprising progress or to identify areas where, perhaps unexpectedly, we did not advance.

Here, I hope to give my perspective on the state of the field. This post will no doubt be a biased sample of what I think is progress in the field. Not only is covering everything effectively impossible, but my views on what may constitute progress may differ from yours. Hopefully all of you reading will glean something from this post, or see a paper you hadn’t heard about. Better yet, feel free to disagree: I’d love to discuss my thoughts further and hear alternate perspectives in the comments below or on Hacker News.

As Jeff Dean points out, there are roughly 100 machine learning papers posted to the Machine Learning ArXiv per day!

As Jeff Dean points out, there are roughly 100 machine learning papers posted to the Machine Learning ArXiv per day!

From AlphaZero to MuZero

AlphaZero was one of my favorite papers from 2017. DeepMind’s world-class Chess- and Go-playing AI got a serious upgrade this year in the form of MuZero, which added Atari Games to its roster of super-human-performing tasks. Atari had previously been out of reach for AlphaZero because the observation space is incredibly large, making it difficult for AlphaZero to build a tree of actions and the outcomes that result. In Go, predicting the outcome of an action is easy, since the board follows a set of rules about what it will look like after taking an action. For Atari, however, predicting the outcome of an action in principle requires predicting what the next frame will look like. This very high-dimensional state space and hard-to-define observation model creates challenges when the system tries to estimate the impact of its actions a mere few frames into the future.

MuZero circumvents this problem by learning a latent (low-dimensional) representation of the state space, including the current frame, and then plans in that learned space. With this shift, taking an action moves around in this compact latent space, allowing the agent to imagine the impact of many different actions and evaluate the tradeoffs that may occur, a hallmark feature of the Monte-Carlo Tree Search (MCTS) algorithm upon which both AlphaZero and MuZero are based. This approach feels much more like what I might expect from a truly intelligent decision-making system: the ability to weigh different options and to do so without the need to predict precisely what the world will look like upon selecting each. Of course, the complication here is how they simultaneously learn this latent space while also learning to plan in it, but I’ll refer you to their paper for more details.

Another honorable mention in this space is Facebook AI’s Hanabi playing AI, in which the system needed to play a cooperative, partially observable card game.

Another honorable mention in this space is Facebook AI’s Hanabi playing AI, in which the system needed to play a cooperative, partially observable card game.

What really struck me about this work is how it composes individual ideas into a larger working system. This paper is as much of a systems paper as any machine learning work I’ve seen, but beyond the dirty tricks that perennially characterize neural network training, the ideas presented in MuZero help answer deep questions about how one might build AI for increasingly complex problems. We are, as a community, making progress towards composing individual ideas to create more powerful decision-making systems. Both AlphaZero and MuZero make progress in this direction, recognizing that the structure of the MCTS tree-building (to simulate the impact of selecting different actions) coupled with the ability to predict the future goodness of each action would result in more powerful learning systems. MuZero’s addition of a learned compact representation (effectively a model of the system’s dynamics) in which actions and subsequent observations can be simulated for the purposes of planning, gives me hope that such systems may one day be able to tackle real-world robotics problems. As we strive to make increasingly intelligent AI, this work moves us in the direction of better understanding what ideas and tools are likely to bring about that reality.

See this post of mine for a discussion of what’s still missing for AlphaZero and MuZero to approach real-world problems

See this post of mine for a discussion of what’s still missing for AlphaZero and MuZero to approach real-world problems

Great work (as always) from the folks at DeepMind!

Representation Learning (Long Live Symbolic AI)

Perhaps the area of progress I am most excited to see is in the space of Representation Learning. I’m a big fan of old-school classical planning and so-called symbolic AI, in which agents interface with the world by thinking about symbols, like objects or people. Humans do this all the time, but in translating our capacity to robotic or artificially intelligent agents, we often have to specify what objects or other predicates we want the agent to reason about. But a question that has largely eluded precise answers is Where do symbols come from? and more generally: How should we go about representing the world so that the robot can make quick and effective decisions when solving complex, real-world problems?

Some recent work has started to make real progress towards being able to learn such representations from data, enabling learned systems to infer objects on their own or to build a “relation graph” of objects and places that they can use to interact with a never-before-seen location. This research is yet young, but I am eager to see it progress, since I am largely convinced that progress towards more capable robotics will require deeper understanding and significant advances in this space. A couple of papers I’ve found particularly interesting include:

- Entity Abstraction in Visual Model-Based Reinforcement Learning This paper is one of a handful of works recently trying to structure the learning problem in a way such that the system learns what objects are and can then forward simulate the behavior of those objects using a learned model of their dynamics. From the paper: “OP3 enforces the entity abstraction, factorizing the latent state into local entity states, each of which are symmetrically processed with the same function that takes in a generic entity as an argument.” This line of work is in its infancy, but I look forward to seeing how the community will continue to investigate using novel structures for learning that encourage the system to tease out entities of interest that can then be used in subsequent planning pipelines.

Here is an example from the Entity Abstraction paper, showing how this process can be used to make predictions about the future: #[image===entity-abstraction-example]#

Here is an example from the Entity Abstraction paper, showing how this process can be used to make predictions about the future: #[image===entity-abstraction-example]#

- Bayesian Relational Memory for Semantic Visual Navigation This paper involves building a topological map online as an agent navigates in search of a semantic goal—e.g., find the kitchen. As it navigates, it periodically identifies new rooms and adds them to its growing relation graph when it becomes sufficiently confident. Everything here is done from vision, meaning that the system has to deal with considerable uncertainty and high-dimensional inputs. This paper has some similar ideas to an influential paper from ICLR 2018: Semi-parametric Topological Memory for Navigation, which required a prior demonstration of the environment to build its map.

I look forward to seeing how the community will continue to blur the lines between model-based and model-free techniques in the next couple of years. More generally, I’d like to see more progress at the intersection of symbolic AI and more “modern” deep learning approaches to tackle problems of interest to the robotics community—e.g., vision-based map-building, planning under uncertainty, and life-long learning.

Supervised Computer Vision Research Cools (somewhat)

That’s not to say that work in this space isn’t important, but since Mask-RCNN from Facebook Research made waves in early 2018, I haven’t been particularly inspired by research in this space. Progress on tasks like semantic segmentation or object detection have matured considerably. The ImageNet challenge for object detection has largely faded into the background as only companies (who often have superior datasets or financial resources) really bother to try to top the leaderboard in related competitions.

But this isn’t a bad thing! In fact, it’s a particularly good time to be a robotics researcher, since the community has reached a point at which we have eeked as much performance as we can out of the datasets we have available to us and have started to focus more on widespread adoption of these tools and the “convenience features” associated with that process. There are now a variety of new techniques being applied to train these systems more quickly and, more importantly, to make them faster and more efficient without compromising accuracy. As someone who is interested in real-world and often real-time use of these technologies—particularly on resource-constrained systems like smartphones and small autonomous robots—I find I am particularly interested in this line of research, which will enable more widespread adoption of these tools and the capabilities they enable.

Of note has been some cool work on network distillation, in which optimization techniques are used after training to remove portions of a neural network that have little bearing on overall performance (and therefore do little more than increase the amount of computation). Though it has not yet seen widespread practical impact, the The Lottery Ticket Hypothesis paper has some fascinating ideas about how to initialize and train small neural networks that circumvent the need for pruning. This “Awesome” GitHub page has as complete a list as I’ve seen on different approaches to network pruning. A related technology of interest is network compilation in which hardware-specific functions are used to further accelerate evaluation; the FastDepth paper is a good example of using a combination of these techniques on the task of monocular depth estimation.

Maturing Technologies

Though new techniques and domains can be exciting, I am at least as interested in seeing what technologies start to slow down. Many areas of research become most interesting when they cross the point at which most of the low-hanging fruit has been picked, leading to investigations into deeper questions as the real challenges stymieing the field become clear. As someone who does research at the intersect of robotics and machine learning, I find that it is often at this point that the technologies become sufficiently robust that one might trust them to inform decision-making on actual hardware.

Graph Neural Networks

I am a huge fan of Graph Neural Networks. Ever since the paper Relational inductive biases, deep learning, and graph networks came out, I have been thinking deeply about how to integrate GNN’s as a learning backend for my own work. The general idea is quite elegant: build a graph in which the nodes correspond to individual entities—objects, regions of space, semantic locations—and connect them to one another according to their ability to impact each other. In short, the idea was to impose as much structure on the problem of interest where you could most easily define it and then let deep neural networks take care of learning the relationships between entities according to that structure (similar in concept to some of the work I discussed above in Representation Learning).

Graphical models have been used in AI for decades, but the problem of how to process high-dimensional observations created a bottleneck that, for a time, only hand-crafted features seemed to be able to overcome. But with GNN’s, high-dimensional inputs became a feature rather than a bug, and last year we saw an explosion of tools for using GNN’s for accomplishing interesting tasks that have proven challenging for other learning representations, like Quantum Chemistry.

This year, as tools for building and using Graph Nets matured, researchers started to apply GNNs to their own problems, leading to some interesting work at the intersection of machine learning and robotics (a place I tend to call home). I am particularly interested in robots capable of making good navigation decisions (especially when they only have incomplete knowledge about their surroundings) and a few papers—particularly Autonomous Exploration Under Uncertainty via Graph Convolutional Networks and Where are the Keys? by Niko Sünderhauf—have proven quite thought-provoking.

If you are interested in playing around with Graph Neural Networks, I would recommend you take the plunge via a Collaboratory Notebook provided by DeepMind in which you can play around with a bunch of demos.

If you are interested in playing around with Graph Neural Networks, I would recommend you take the plunge via a Collaboratory Notebook provided by DeepMind in which you can play around with a bunch of demos.

Explainable & Interpretable AI

As excited as I am by the promise of deep learning and approaches to representation learning, the systems that result from these techniques are often opaque, a problematic property as such systems are increasingly human-facing. Fortunately, there has been increased attention and progress in the space of Explainable and Interpretable AI and, in general, working towards AI that humans might be comfortable trusting and co-existing with.

Relatedly: though it came out in 2018, I read the book Automating Inequality this past year and think that it should be required reading for all AI researchers so that we might think more critically about how the decisions we make (often unilaterally) have downstream consequences when these systems are deployed in real-world settings.

Relatedly: though it came out in 2018, I read the book Automating Inequality this past year and think that it should be required reading for all AI researchers so that we might think more critically about how the decisions we make (often unilaterally) have downstream consequences when these systems are deployed in real-world settings.

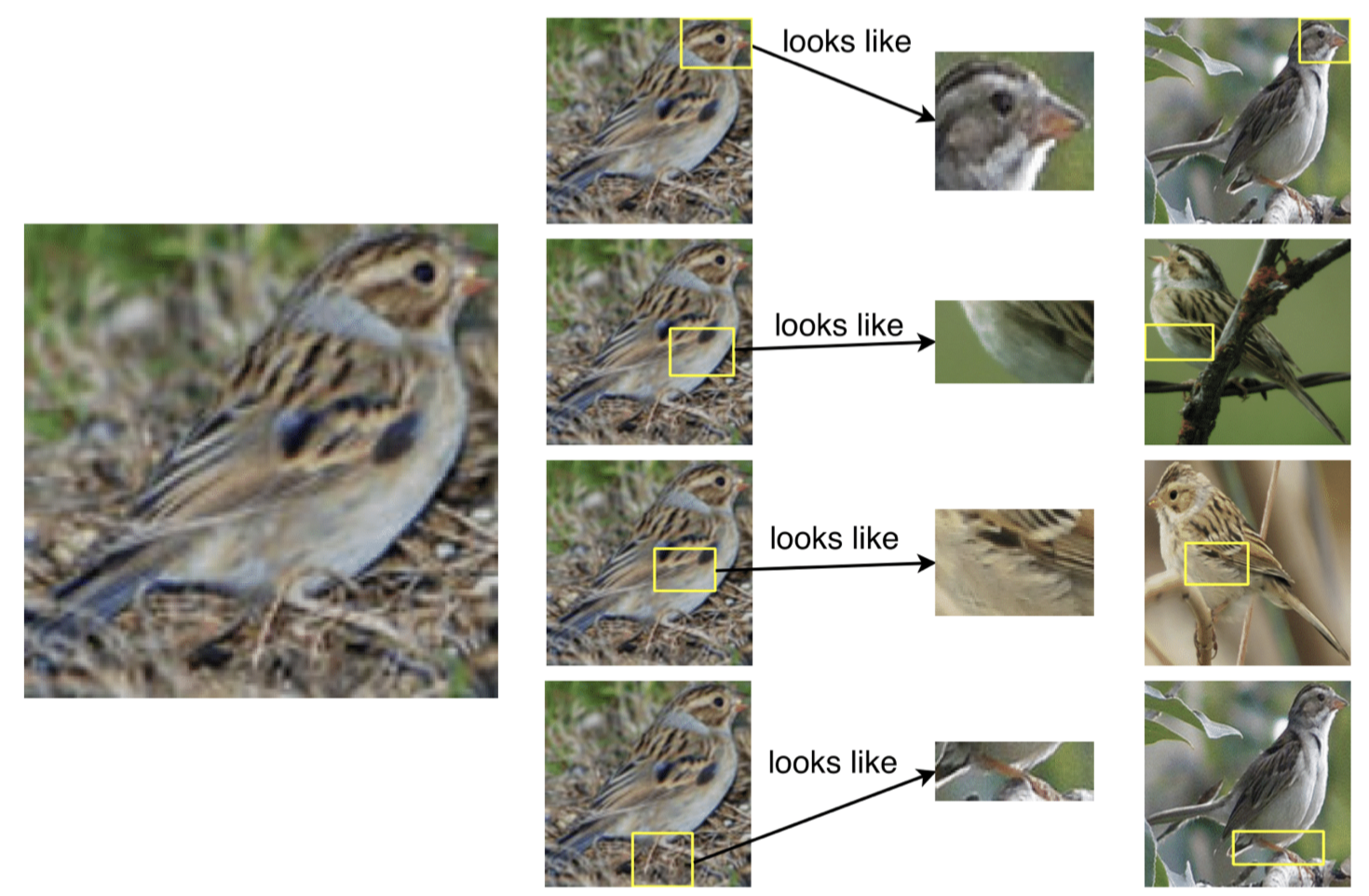

One of the more interesting papers in the vein of interpretable AI that caught my eye recently is This Looks Like That: Deep Learning for Interpretable Image Recognition by Chaofan Chen and Oscar Li from Cynthia Rudin’s lab at Duke. In the paper, the authors set up an image classification pipeline that works by identifying which regions of the current image match similar regions in other images and matching the classification between the two. The classification is therefore more interpretable than that of other competitive techniques since it, by design, provides a direct comparison to similar images and features from the training set. Here is an image from the paper showing how the system classified an image of a clay colored sparrow:

Cynthia Rudin this year also published her well-known work: Stop Explaining Black Box Machine Learning Models for High Stakes Decisions and Use Interpretable Models Instead. In it, she argues that we should stop “explaining” (posthoc) decisions made by black-box models, and should instead be building models that are interpretable by construction. While I don’t know that I necessarily agree that this should be cause to immediately stop using black-box models, she makes some well-reasoned points in her paper. In particular, her voice is of critical importance to a field dominated by the development of black-box models.

There has also been some good work this past year, like Explaining Explanations to Society (by some friends and colleagues of mine, Leilani H. Gilpin and Cecilia Testart et al.), that focus on broader questions associated with understanding what types of explanations are most useful for society and how we might overcome the limitations of existing Deep Learning systems to extract such outputs.

In short, one of my biggest takeaways from 2019 is that researchers in particular should be conscious about how we develop models and try to build systems that are interpretable-by-design whenever possible. I am interested in such applications of recent work of mine and hope that an increasing portion of the community makes the design of such systems a priority.

Continued Growth of Simulation Tools and Progress in Sim-to-Real

Simulation is an incredibly useful tool, since data is cheap and effectively infinite, if not particularly diverse. Last year (2018) saw an explosion of simulated tools, many of them providing photorealistic images from simulated real-world environments and aimed at being directly useful for enabling real-world capabilities; these environments included InteriorNet: Mega-scale Multi-sensor Photo-realistic Indoor Scenes Dataset and the fantastic GibsonEnv, which includes “572 full buildings composed of 1447 floors covering a total area of 211k [square meters]”. This year has seen continued growth in this space, including a new, interactive Gibson Environment and Facebook’s gorgeous AI Habitat environment:

There’s also been continued interest in using video games as platforms for studying AI. Most recently Facebook has open sourced CraftAssist: “a platform for studying collaborative AI bots in Minecraft”.

There’s also been continued interest in using video games as platforms for studying AI. Most recently Facebook has open sourced CraftAssist: “a platform for studying collaborative AI bots in Minecraft”.

There are an ever-increasing number of technologies for using simulation tools to enable good performance in the real world. Admittedly, I’ve never been totally sold on the promise of domain randomization, in which elements of a simulated scene (texture, lighting, color, etc.) are randomly changed so that the learning algorithm learns to ignore those often irrelevant details. For many robotics applications, specific textures and lighting may actually matter for planning, and domain-specific techniques may be more appropriate and randomization, like some data augmentation procedures, may introduce problems of its own. That being said, recent efforts—including Sim-to-Real via Sim-to-Sim—and the widespread use of these techniques to improve performance throughout various subfields this past year are starting to convince me of its general utility. OpenAI also used domain randomization over both visual appearance and physics to learn to manipulate a Rubik’s Cube, thus proving their robot hand more dextrous than I am. Beyond randomization, domain adaptation procedures, which actively transfer knowledge between domains, have also seen some progress in the last year. I am particularly interested in work like Closing the Sim-to-Real Loop: Adapting Simulation Randomization with Real World Experience, in which a handful of real-world rollouts allow an RL agent to adapt its experience from simulation.

Also, the 2019 RSS conference had a full day workshop devoted to “Closing the Reality Gap in Sim2Real Transfer for Robotic Manipulation” that’s worth looking at if you’re interested.

Also, the 2019 RSS conference had a full day workshop devoted to “Closing the Reality Gap in Sim2Real Transfer for Robotic Manipulation” that’s worth looking at if you’re interested.

Bittersweet Lessons

No discussion of AI in 2019 would be complete without a mention of the debate surrounding “The Bitter Lesson”. In March, Rich Sutton (a famous and well-respected researcher in AI) published a post to his website in which he talks about how, repeatedly over the course of AI’s history and his career, model-based methods—in which rigid structure is hand-designed by humans—have been overtaken by model-free methods, like Deep Learning. He cites as one example of this “SIFT” features for object detection: though they were the state-of-the-art for 20 years, Deep Learning has blown all of those results out of the water. He goes on:

This is a big lesson. As a field, we still have not thoroughly learned it, as we are continuing to make the same kind of mistakes. To see this, and to effectively resist it, we have to understand the appeal of these mistakes. We have to learn the bitter lesson that building in how we think we think does not work in the long run. The bitter lesson is based on the historical observations that 1) AI researchers have often tried to build knowledge into their agents, 2) this always helps in the short term, and is personally satisfying to the researcher, but 3) in the long run it plateaus and even inhibits further progress, and 4) breakthrough progress eventually arrives by an opposing approach based on scaling computation by search and learning. The eventual success is tinged with bitterness, and often incompletely digested, because it is success over a favored, human-centric approach.

One thing that should be learned from the bitter lesson is the great power of general purpose methods, of methods that continue to scale with increased computation even as the available computation becomes very great. The two methods that seem to scale arbitrarily in this way are search and learning.

His perspective spawned much debate in the AI research community, and some incredibly engaging rebuttals from the likes of Rodney Brooks and Max Welling. My take? There are always prior assumptions baked into our learning algorithms and we are only just scratching the surface of understanding how our data and learning representations translate into an ability to generalize. This is one of the reasons I am so excited by representation learning and by research at the intersection of deep learning and classical planning techniques. Only through being explicit about how we encode an agent’s ability to reuse its knowledge can we hope to achieve trustworthy generalization on complex, multi-sequence planning tasks. We should expect AI to exhibit combinatorial generalization, as humans do, in which we can achieve effective generalization without the need for exponentially growing datasets.

Conclusion

With as much progress as there was in 2019, there are yet areas ripe for growth in the coming years. I’d like to see more applications to Partially Observable Domains, which require that an agent have a deep understanding of its environment so that it may make predictions about the future (this is something I’m actively working on). I’m also interested in seeing more progress in so-called long-lived AI: systems that continue to learn and grow as they spend more time interacting with their surroundings. For now, many systems that interact with the world have a tough time handling noise in an elegant way and, except for the simplest of applications, most learned models will break down as the number of sensor observations grows.

As I mentioned earlier, I welcome discussion: let me know if there’s anything you thought I missed or feel strongly about. Feel free to drop me a line or comment on this review in the comments below or on Hacker News.

Wishing you all health and happiness in 2020!

References

- David Silver et al., A general reinforcement learning algorithm that masters Chess, Shogi, and Go through self-play, Science, 2018.

- Julian Schrittwieser, Ioannis Antonoglou, Thomas Hubert, Karen Simonyan, Laurent Sifre, Simon Schmitt, Arthur Guez, Edward Lockhart, Demis Hassabis, Thore Graepel, Timothy Lillicrap & David Silver, Mastering Atari, Go, Chess and Shogi by Planning with a Learned Model, in: Advances in Neural Information Processing Systems (NeurIPS), 2019.

- Jonathan Frankle & Michael Carbin, The Lottery Ticket Hypothesis: Finding Sparse, Trainable Neural Networks, in: International Conference on Learning Representations, 2019.

- Diana Wofk, Fangchang Ma, Tien-Ju Yang, Sertac Karaman & Vivienne Sze, FastDepth: Fast Monocular Depth Estimation on Embedded Systems, in: International Conference on Robotics and Automation (ICRA), 2019.

- Yi Wu, Yuxin Wu, Aviv Tamar, Stuart Russell, Georgia Gkioxari & Yuandong Tian, Bayesian Relational Memory for Semantic Visual Navigation, in: International Conference on Computer Vision (ICCV), 2019.

- Rishi Veerapaneni, John D. Co-Reyes, Michael Chang, Michael Janner, Chelsea Finn, Jiajun Wu, Joshua B. Tenenbaum & Sergey Levine, Entity Abstraction in Visual Model-Based Reinforcement Learning, in: Conference on Robot Learning (CoRL), 2019.

- Peter W. Battaglia et al., Relational inductive biases, deep learning, and graph networks, arXiv preprint arXiv:1806.01261, 2018.

- Fanfei Chen, Jinkun Wang, Tixiao Shan & Brendan Englot, Autonomous Exploration Under Uncertainty via Graph Convolutional Networks, in: International Symposium on Robotics Research (ISRR), 2019.

- Virginia Eubanks, Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor, 2018.

- Chaofan Chen, Oscar Li, Alina Barnett, Jonathan Su & Cynthia Rudin, This Looks Like That: Deep Learning for Interpretable Image Recognition, in: Neural Information Processing Systems (NeurIPS), 2019.

- Fei Xia et al., Gibson Env V2: Embodied Simulation Environments for Interactive Navigation, 2019.

- Stephen James, Paul Wohlhart, Mrinal Kalakrishnan, Dmitry Kalashnikov, Alex Irpan, Julian Ibarz, Sergey Levine, Raia Hadsell & Konstantinos Bousmalis, Sim-to-Real via Sim-to-Sim: Data-efficient Robotic Grasping via Randomized-to-Canonical Adaptation Networks, in: Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

- OpenAI et al., Solving Rubik's Cube with a Robot Hand, arXiv preprint arXiv:1910.07113, 2019.